Veeam Backup & Replication Best Practice Guide

VMware vSphere proxy

Building a VMware vSphere proxy is a straightforward process that does not require any special care: adding it takes just a few clicks in the Veeam Backup & Replication UI.

However, in certain circumstances there are some additional tweaks worth considering to optimize the infrastructure.

Physical proxy

Windows

A physical proxy can be used with two different transport modes: Direct SAN and Backup from Storage Snapshots (BfSS). From an infrastructure point of view, the main difference is in how the proxy interacts with the source storage array.

With Direct SAN, the proxy must be zoned with the source storage and the LUNs containing the virtual machines to be protected must be mapped to it, whereas with BfSS only zoning is required.

This exposes a risk specific to Direct SAN: the LUNs containing the source VMs are visible in Windows Disk Management on the proxy, and when the proxy also acts as a repository server, managing both local and SAN volumes on the same machine is prone to human error. Eliminating the risk of creating a new file system on the wrong volume (that is, on a vSphere datastore) becomes key.

When deploying the data mover package, Veeam Backup & Replication automatically disables the Windows automount feature and removes the drive letters assigned to previously mounted volumes (automount scrub). However, the volumes are still mounted with read-write access.

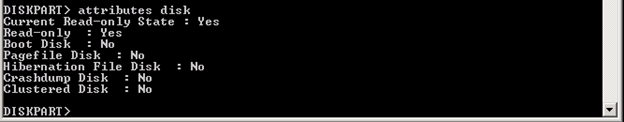

From the backup process point of view, read-write access is not required, so the DISKPART utility can be used to set the LUNs where the source VMs are running to read-only:

DISKPART> list disk

DISKPART> select disk x

DISKPART> attributes disk set readonly

DISKPART> attributes disk

The process has to be repeated for every LUN on which a VMware vSphere datastore has been created.
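Because the same three DISKPART commands must be repeated for every datastore LUN, it can help to generate them once as a script and run it with `diskpart /s`. The following is a minimal sketch, assuming you have already identified the correct disk numbers with `list disk`; the function name `build_diskpart_script` and the disk numbers used below are hypothetical and for illustration only. Passing `readonly=False` produces the matching script that clears the attribute, as needed before a Direct SAN restore.

```python
def build_diskpart_script(disk_numbers: list[int], readonly: bool = True) -> str:
    """Return DISKPART script text that sets (or clears) the read-only
    attribute on every disk number in disk_numbers."""
    if not disk_numbers:
        raise ValueError("no disk numbers given")
    action = "set" if readonly else "clear"
    lines: list[str] = []
    for number in disk_numbers:
        if number < 0:
            raise ValueError(f"invalid disk number: {number}")
        lines.append(f"select disk {number}")
        lines.append(f"attributes disk {action} readonly")
        # Show the resulting attributes so the change can be checked in the output.
        lines.append("attributes disk")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical disk numbers: take the real ones from "list disk" on the proxy
    # and double-check that each one backs a vSphere datastore.
    datastore_disks = [2, 3]
    print(build_diskpart_script(datastore_disks), end="")
```

Save the printed output to a file (for example `set_readonly.txt`) and run `diskpart /s set_readonly.txt` from an elevated command prompt on the proxy. Generating the script does not remove the need to verify each disk number: selecting the wrong disk is exactly the human error this procedure is meant to prevent.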

BfSS, on the other hand, does not require this process, since only the storage array’s snapshots are presented to the proxy. During backup operations a new volume becomes visible in Windows Disk Management, but it is never the source LUN.

Linux

On Linux systems, ensure that multipathing is correctly configured and enabled. The system also needs to have the Open-iSCSI initiator enabled.

Note that multipathing for Linux-based backup proxies in Direct SAN access mode leverages only path failover, not load balancing.

Also use lsblk or multipath -ll to verify that the LUNs are not mounted or initialized on the proxy.
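The mount part of this check can be automated by reading the JSON output of `lsblk -J -o NAME,TYPE,FSTYPE,MOUNTPOINT` and flagging anything mounted on or below the SAN LUNs. This is a minimal sketch under stated assumptions: the function names are hypothetical, you supply the LUN device names yourself (for example from `multipath -ll`), and the JSON layout can vary slightly between util-linux versions. It does not detect whether a LUN has been initialized; that still requires reviewing the lsblk and multipath -ll output manually.

```python
import json
import subprocess


def read_lsblk() -> str:
    """Run lsblk on the proxy and return its JSON output."""
    result = subprocess.run(
        ["lsblk", "-J", "-o", "NAME,TYPE,FSTYPE,MOUNTPOINT"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def _mountpoints(node: dict) -> set[str]:
    # Older lsblk versions emit "mountpoint" (string or null); newer ones may
    # also emit a "mountpoints" list. Accept either.
    points = {p for p in node.get("mountpoints") or [] if p}
    if node.get("mountpoint"):
        points.add(node["mountpoint"])
    return points


def mounted_san_devices(lsblk_json: str, lun_names: set[str]) -> list[tuple[str, str]]:
    """Return sorted (device, mountpoint) pairs for anything mounted on or below the given LUNs."""
    found: set[tuple[str, str]] = set()

    def walk(node: dict, inside_lun: bool) -> None:
        inside = inside_lun or node["name"] in lun_names
        if inside:
            for point in _mountpoints(node):
                found.add((node["name"], point))
        for child in node.get("children") or []:
            walk(child, inside)

    for device in json.loads(lsblk_json)["blockdevices"]:
        walk(device, False)
    # A multipath device can appear under each of its paths; the set removes duplicates.
    return sorted(found)
```

On the proxy, `mounted_san_devices(read_lsblk(), {"sdb", "sdc"})` (device names hypothetical) should return an empty list; any returned pair points to a SAN volume that is mounted and needs attention.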

For Direct NFS Access, ensure the following packages are installed:

- NFS client package:

  - Debian-based: nfs-common package

  - RHEL-based: nfs-utils package
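When preparing proxies of different distributions, the package name can be chosen from the `ID` and `ID_LIKE` fields of `/etc/os-release`. The following is a minimal sketch, assuming that a "debian" family maps to nfs-common and a "rhel", "fedora" or "centos" family maps to nfs-utils; the function names are hypothetical, and other families (for example SUSE) are deliberately rejected rather than guessed.

```python
def parse_os_release(text: str) -> dict[str, str]:
    """Parse KEY=value lines from /etc/os-release content, dropping quotes."""
    fields: dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        fields[key] = value.strip("\"'")
    return fields


def nfs_client_package(os_release_text: str) -> str:
    """Return the NFS client package name for a Debian- or RHEL-based system."""
    fields = parse_os_release(os_release_text)
    # ID names the distribution itself; ID_LIKE lists the families it derives from.
    families = {fields.get("ID", "")} | set(fields.get("ID_LIKE", "").split())
    if "debian" in families:
        return "nfs-common"
    if families & {"rhel", "fedora", "centos"}:
        return "nfs-utils"
    raise ValueError(f"unrecognized distribution: {fields.get('ID', 'unknown')}")
```

For example, `nfs_client_package(open("/etc/os-release").read())` returns the name to pass to `apt-get install` on Debian-based systems or `dnf install` on RHEL-based systems.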

Restoring data in Direct SAN

Windows

Restoring data through the SAN is possible only for thick VMDKs, and using this mode forces Veeam Backup & Replication to restore disks in thick format.

For the restore to work properly, the target volume must be set back to read-write. Use DISKPART as follows to clear the read-only attribute:

DISKPART> list disk

DISKPART> select disk x

DISKPART> attributes disk clear readonly

Considerations and limitations

Remember that several factors can negatively affect backup resource consumption and speed:

- Compression level - It is recommended to use Optimal compression in general.

  High and Extreme compression add CPU and RAM overhead and result in slower backups and restores.

  However, if you have a lot of free CPU resources during the backup window, you can consider using High compression.

- Block size - The smaller the block size, the more RAM is needed for deduplication.

  For example, you will see an increase in RAM consumption when using a 512 KB block size compared to 1 MB, and an even higher RAM load (2 to 4 times) when using 256 KB.

  Best practice for most environments is to use the default job settings (1 MB for backup jobs and 512 KB for replication jobs), unless the documentation or this guide recommends otherwise for a specific case.

- Antivirus - see KB1999 for the complete list of paths that need to be excluded from antivirus scanning.

- Third-party applications - it is not recommended to use an application server as a backup proxy.
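The block size effect described above follows from block count: halving the block size doubles the number of blocks that deduplication has to track for the same amount of data. The sketch below only counts blocks per 1 TiB of source data; it assumes the "1 MB", "512 KB" and "256 KB" settings are binary units and that deduplication memory grows roughly with block count, which is a simplification rather than Veeam's actual memory model.

```python
KIB = 1024


def block_count(data_bytes: int, block_size_kib: int) -> int:
    """Number of blocks needed to cover data_bytes (ceiling division)."""
    block_bytes = block_size_kib * KIB
    return -(-data_bytes // block_bytes)


if __name__ == "__main__":
    one_tib = 1024 ** 4
    baseline = block_count(one_tib, 1024)
    for size_kib in (1024, 512, 256):
        blocks = block_count(one_tib, size_kib)
        # Ratio relative to the 1 MB default used for backup jobs.
        print(f"{size_kib:>5} KB blocks: {blocks:>9,} ({blocks / baseline:.0f}x the 1 MB count)")
```

For 1 TiB this gives 1,048,576 blocks at 1 MB, 2,097,152 at 512 KB and 4,194,304 at 256 KB. The 4x block count at 256 KB is consistent with the 2 to 4 times higher RAM load mentioned above, since not all memory use scales with the number of blocks.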